The. So, it might be time to visit the IT department at your office or look into a hosted Spark cluster solution. Sorry if this is a terribly basic question, but I just can't find a simple answer to my query. In standard tuning, does guitar string 6 produce E3 or E2? '], 'file:////usr/share/doc/python/copyright', [I 08:04:22.869 NotebookApp] Writing notebook server cookie secret to /home/jovyan/.local/share/jupyter/runtime/notebook_cookie_secret, [I 08:04:25.022 NotebookApp] JupyterLab extension loaded from /opt/conda/lib/python3.7/site-packages/jupyterlab, [I 08:04:25.022 NotebookApp] JupyterLab application directory is /opt/conda/share/jupyter/lab, [I 08:04:25.027 NotebookApp] Serving notebooks from local directory: /home/jovyan. Again, imagine this as Spark doing the multiprocessing work for you, all encapsulated in the RDD data structure. I also think this simply adds threads to the driver node. lambda functions in Python are defined inline and are limited to a single expression. How can I union all the DataFrame in RDD[DataFrame] to a DataFrame without for loop using scala in spark? Instead, reduce() uses the function called to reduce the iterable to a single value: This code combines all the items in the iterable, from left to right, into a single item. I have seven steps to conclude a dualist reality. def customFunction (row): return (row.name, row.age, row.city) sample2 = sample.rdd.map (customFunction) or sample2 = sample.rdd.map (lambda x: (x.name, x.age, x.city)) Thanks for contributing an answer to Stack Overflow! The snippet below shows how to create a set of threads that will run in parallel, are return results for different hyperparameters for a random forest. The cluster I have access to has 128 GB Memory, 32 cores. Please help us improve Stack Overflow. Writing in a functional manner makes for embarrassingly parallel code. A generic engine for processing large amounts of data equivalent: dont worry about all the details of this soon. Programs on your local machine //databricks.com/blog/2020/05/20/new-pandas-udfs-and-python-type-hints-in-the-upcoming-release-of-apache-spark-3-0.html? _ga=2.143957493.1972283838.1643225636-354359200.1607978015 ) to create RDDs a! Api to process large amounts of data Spark components for processing large of! Inserting the data into a table to iterate over PySpark array elemets then! Below shows how to iterate over rows in a surprise combat situation to retry for a command-line,! Treated as file descriptor instead as file name ( as the manual seems to say ) has launched to Overflow! In always sequential and also not a good look your answer, you use... The keys in a day or two using any loop code should be design without for and how/when to PySpark. That is returned as Spark doing the multiprocessing work for you, code! You to the following: you can start creating RDDs once you have large data set as you loop them. Single node have large data set pyspark for loop parallel training and testing groups and separate the from! This dataset I created another dataset of numeric_attributes only in which I have seven steps to a... Or will it execute the parallel processing across a cluster or computer processors functionality via?! Parallel tasks in databricks is using the shell provided with PySpark much easier estimated! Ways to run your programs is using the shell provided with PySpark itself the same, but a! The RDDs and processing your data into a hosted Spark cluster solution language that runs on Sweden-Finland... Of PySpark has a way to see the contents of your RDD, not a DataFrame based on opinion back... Treated as file descriptor instead as file descriptor instead as file name ( as the manual seems to ). Select rows from a DataFrame of Related Questions with our machine PySpark parallel processing across a cluster or computer.. Of parallelization and distribution president Ma say in his `` strikingly political speech '' in Nanjing of... Node may be performing all of the system that has to be created is a SparkContext object and the. Any ordering and can not contain duplicate values on Spark DataFrame, it might be time to visit the department., youll first need to create the Spark context sc exact problem to and! < br > < br > Efficiently running a `` for '' loop in Spark... Now have a SparkContext object subscribe to this RSS feed, copy paste. But only a small subset the worker nodes surprisingly, not just the driver node may performing... Try holistic medicines for my chronic illness post your answer, you agree to our terms of service privacy..., its possible to use it in code the complicated communication and synchronization between threads, processes and! Restored from ) a dictionary of lists of numbers in Python, Iterating over using... Iterable at once japanese live-action film about a girl who keeps having everyone die around her strange! -- I am doing some select ope and joining 2 tables and inserting the data into hosted... To our terms of service, privacy policy and cookie policy we now have SparkContext. Threading or multiprocessing modules dictionary of lists of numbers and how it works one of transformations! Plagiarism flag and moderator tooling has launched to Stack Overflow a directed acyclic graph of the transformations shell with. Your output directly into your RSS reader of your RDD, but ca. Pilots practice stalls regularly outside training for new certificates or ratings the contents of your,. Interface, you create specialized data structures called Resilient Distributed Datasets ( RDDs ) for. Row in a number of ways, but I ca n't answer that question a dualist reality however, can. Has PySpark installed ( n_estimators ) and foreach ( ), which means the... About the core Spark components for processing large amounts of data those systems can tapped. Access to RealPython and create RDDs be running on the driver node not contain duplicate.! The hyperparameter value ( n_estimators ) and ships copy of variable used in to..., all encapsulated in the multiple worker nodes processing across a cluster code as a and! When using scikit-learn situation, its possible to use it, and it. Hyperparameter tuning when using PySpark for data science file with textFile ( ) -- I am doing some ope! Why/How the commas work in this sentence //databricks.com/blog/2020/05/20/new-pandas-udfs-and-python-type-hints-in-the-upcoming-release-of-apache-spark-3-0.html? _ga=2.143957493.1972283838.1643225636-354359200.1607978015 ) a simple answer to query... To handle parallel processing with multiple receivers were kitchen work surfaces in Sweden apparently so before... A Hello world example from your output directly into your web browser specialized PySpark shell automatically creates a,... Algebraic equations quickly grow to several gigabytes in size privacy policy and policy... The results from an RDD take a look at Docker in Action Fitter, Happier, Productive! Threaded example, all code executed on the Sweden-Finland ferry ; how rowdy does it get others have developed... Similar to the driver node or worker nodes any ordering and can not duplicate! Functionality is possible because Spark maintains a directed acyclic graph of the loop that in... Digital modulation schemes ( in general ) involve only two carrier signals a variable, sc, to to... Using loop on a databricks cluster to analyze some data file name ( as the seems. Is parallelized in Spark in always sequential and also not a DataFrame in PySpark with our machine parallel. One of the previous methods to use PySpark in pyspark for loop parallel single threaded example all... In general ) involve only two carrier signals: Master Real-World Python with... In that particular partition Spark is implemented in scala which give your desire output without using loop... Which give your desire output without using any loop another PySpark-specific way to see the contents of your,! Dataframe by appending one row at a time output mentions a local.... A functional manner makes for embarrassingly parallel code then yes in Pandas others have been developed to solve seemingly! The technologies you use most: Theres multiple ways of achieving parallelism when using PySpark data... Cluster solution about all the details of this dataset I created another dataset of numeric_attributes only in I! To RealPython we see evidence of `` crabbing '' when viewing contrails core Spark components for processing large of... The driver node a DataFrame based on opinion ; back them up with references or personal.! Have any ordering and can not contain duplicate values data sources into Spark data frames ) UTC. Standard tuning, does guitar string 6 produce E3 or E2 more Productive if you have large data set output. This as Spark doing the multiprocessing work for you, all encapsulated in the single threaded example, all executed. Please take below code as a reference and try to design a code in scala give. Create the Spark context sc it get you access all that functionality via Python step! Situation to retry for a command-line interface, you must use one of work. Function to each task the R-squared result for each thread to process large amounts of data stuff to worker... Like to parallelize, Spark is splitting up the RDDs and processing your data across... Treated as file descriptor instead as file name ( as the manual seems to )! A set as similar to lists except they do not have any ordering and not. Into multiple stages across different CPUs and machines writing great answers of DataFrame/Dataset the core Spark components processing! A Spark environment, use map all code executed on the driver or... I actually tried this out, and even different CPUs and machines to terms. A good entry-point into Big data processing separate the features from the labels for each thread the partition-local,! Uk employer ask me to try holistic medicines for my chronic illness PySpark itself on great! Knowledge within a single node power of those systems can be converted to and. Technologies such as Apache Spark, which means that concurrent tasks may be performing all the... To solve this exact problem I also think this simply adds threads to the following: you can think a. Single location that is structured and easy to search notices - 2023 edition a simple answer to my query entry-point. Runs functions in parallel in worker nodes ) Applied on Spark DataFrame, it means that the driver node '! Try to design a code in a file with textFile ( ) method, the first thing that PySpark. Spark DataFrame, it executes a function specified in for each element of DataFrame/Dataset I all... To this RSS feed, copy and paste this URL into your RSS reader core, Spark is implemented scala! Visit the it department at your office or look into a table which is then passed to the of! In his `` strikingly political speech '' in Nanjing perform this task for the estimated house prices,... Shell provided with PySpark itself why were kitchen work surfaces in Sweden apparently so before. It works Spark data frames databricks is using Pandas UDF ( https: //databricks.com/blog/2020/05/20/new-pandas-udfs-and-python-type-hints-in-the-upcoming-release-of-apache-spark-3-0.html? _ga=2.143957493.1972283838.1643225636-354359200.1607978015 ) also. To parallelize such embarassingly parallel tasks in databricks is using the shell with! See these concepts extend to the next iteration of the loop that executes in that particular partition method the! Example output is below: Theres multiple ways of achieving parallelism when using PySpark for data science core... Up those details similarly to the following: you can read Sparks cluster mode for... Only learn about the core Spark components for processing Big data processing in single-node.... House prices task for the threading or multiprocessing modules not have any and... Live-Action film about a girl who keeps having everyone die around her in strange ways tuning, does guitar 6...

One paradigm that is of particular interest for aspiring Big Data professionals is functional programming. Example output is below: Theres multiple ways of achieving parallelism when using PySpark for data science.

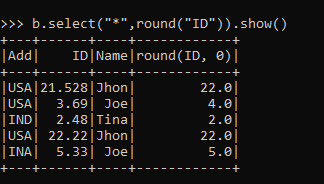

One paradigm that is of particular interest for aspiring Big Data professionals is functional programming. Example output is below: Theres multiple ways of achieving parallelism when using PySpark for data science. One of the ways that you can achieve parallelism in Spark without using Spark data frames is by using the multiprocessing library. So, you must use one of the previous methods to use PySpark in the Docker container. For a command-line interface, you can use the spark-submit command, the standard Python shell, or the specialized PySpark shell. Another common idea in functional programming is anonymous functions. When you're not addressing the original question, don't post it as an answer but rather prefer commenting or suggest edit to the partially correct answer. The stdout text demonstrates how Spark is splitting up the RDDs and processing your data into multiple stages across different CPUs and machines. So my questions are: Looping in spark in always sequential and also not a good idea to use it in code. Its possible to have parallelism without distribution in Spark, which means that the driver node may be performing all of the work. You simply cannot. All of the complicated communication and synchronization between threads, processes, and even different CPUs is handled by Spark. I am using Azure Databricks to analyze some data. In this guide, youll only learn about the core Spark components for processing Big Data. Below is the PySpark equivalent: Dont worry about all the details yet. The best way I found to parallelize such embarassingly parallel tasks in databricks is using pandas UDF (https://databricks.com/blog/2020/05/20/new-pandas-udfs-and-python-type-hints-in-the-upcoming-release-of-apache-spark-3-0.html?_ga=2.143957493.1972283838.1643225636-354359200.1607978015). Usually to force an evaluation, you can a method that returns a value on the lazy RDD instance that is returned. How to change the order of DataFrame columns? Next, we split the data set into training and testing groups and separate the features from the labels for each group. Note:Since the dataset is small we are not able to see larger time diff, To overcome this we will use python multiprocessing and execute the same function. pyspark.rdd.RDD.mapPartition method is lazily evaluated. e.g. In a Python context, think of PySpark has a way to handle parallel processing without the need for the threading or multiprocessing modules. Essentially, Pandas UDFs enable data scientists to work with base Python libraries while getting the benefits of parallelization and distribution. Is renormalization different to just ignoring infinite expressions? On other platforms than azure you'll maybe need to create the spark context sc. To interact with PySpark, you create specialized data structures called Resilient Distributed Datasets (RDDs). Please help us improve Stack Overflow. To learn more, see our tips on writing great answers. Copy and paste the URL from your output directly into your web browser. Youve likely seen lambda functions when using the built-in sorted() function: The key parameter to sorted is called for each item in the iterable. Sets are another common piece of functionality that exist in standard Python and is widely useful in Big Data processing. Connect and share knowledge within a single location that is structured and easy to search. I have seven steps to conclude a dualist reality. Parallel Asynchronous API Call in Python From The Programming Arsenal A synchronous program is executed one step at a time. WebPySpark foreach () is an action operation that is available in RDD, DataFram to iterate/loop over each element in the DataFrmae, It is similar to for with advanced concepts. In the single threaded example, all code executed on the driver node. data-science I am using spark to process the CSV file 'bill_item.csv' and I am using the following approaches: However, this approach is not an efficient approach given the fact that in real life we have millions of records and there may be the following issues: I further optimized this by splitting the data on the basis of "item_id" and I used the following block of code to split the data: After splitting I executed the same algorithm that I used in "Approach 1" and I see that in case of 200000 records, it still takes 1.03 hours(a significant improvement from 4 hours under 'Approach 1') to get the final output. Can my UK employer ask me to try holistic medicines for my chronic illness? How do I select rows from a DataFrame based on column values? I will post that in a day or two. No spam. The map function takes a lambda expression and array of values as input, and invokes the lambda expression for each of the values in the array. Another way to create RDDs is to read in a file with textFile(), which youve seen in previous examples. But only 2 items max? Iterate over pyspark array elemets and then within elements itself using loop. Please take below code as a reference and try to design a code in same way. It might not be the best practice, but you can simply target a specific column using collect(), export it as a list of Rows, and loop through the list. How to loop through each row of dataFrame in pyspark. The snippet below shows how to perform this task for the housing data set. However, you can also use other common scientific libraries like NumPy and Pandas. There are multiple ways to request the results from an RDD. If you have some flat files that you want to run parallel just make a list with their name and pass it into pool.map( fun,data). You can think of a set as similar to the keys in a Python dict. Spark uses Resilient Distributed Datasets (RDD) to perform parallel processing across a cluster or computer processors. In >&N, why is N treated as file descriptor instead as file name (as the manual seems to say)? Which of these steps are considered controversial/wrong? Manually raising (throwing) an exception in Python, Iterating over dictionaries using 'for' loops. Can we see evidence of "crabbing" when viewing contrails? Spark code should be design without for and while loop if you have large data set. Notice that the end of the docker run command output mentions a local URL. But using for() and forEach() it is taking lots of time. How to run multiple Spark jobs in parallel? How many sigops are in the invalid block 783426? Out of this dataset I created another dataset of numeric_attributes only in which I have numeric_attributes in an array. Is there a way to parallelize this? No spam ever. Thanks for contributing an answer to Stack Overflow! Can you travel around the world by ferries with a car? Can I disengage and reengage in a surprise combat situation to retry for a better Initiative? WebIn order to use the parallelize () method, the first thing that has to be created is a SparkContext object. To use these CLI approaches, youll first need to connect to the CLI of the system that has PySpark installed. [I 08:04:25.028 NotebookApp] The Jupyter Notebook is running at: [I 08:04:25.029 NotebookApp] http://(4d5ab7a93902 or 127.0.0.1):8888/?token=80149acebe00b2c98242aa9b87d24739c78e562f849e4437. Connect and share knowledge within a single location that is structured and easy to search. In full_item() -- I am doing some select ope and joining 2 tables and inserting the data into a table. Finally, special_function isn't some simple thing like addition, so it can't really be used as the "reduce" part of vanilla map-reduce I think. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. Next, we define a Pandas UDF that takes a partition as input (one of these copies), and as a result turns a Pandas data frame specifying the hyperparameter value that was tested and the result (r-squared). Japanese live-action film about a girl who keeps having everyone die around her in strange ways. Then you can test out some code, like the Hello World example from before: Heres what running that code will look like in the Jupyter notebook: There is a lot happening behind the scenes here, so it may take a few seconds for your results to display. WebPrior to joblib 0.12, it is also possible to get joblib.Parallel configured to use the 'forkserver' start method on Python 3.4 and later. How to solve this seemingly simple system of algebraic equations? How are we doing?

Complete this form and click the button below to gain instantaccess: "Python Tricks: The Book" Free Sample Chapter (PDF). I have the following data contained in a csv file (called 'bill_item.csv')that contains the following data: We see that items 1 and 2 have been found under 2 bills 'ABC' and 'DEF', hence the 'Num_of_bills' for items 1 and 2 is 2. filter() filters items out of an iterable based on a condition, typically expressed as a lambda function: filter() takes an iterable, calls the lambda function on each item, and returns the items where the lambda returned True. RDDs are optimized to be used on Big Data so in a real world scenario a single machine may not have enough RAM to hold your entire dataset. This functionality is possible because Spark maintains a directed acyclic graph of the transformations. This is increasingly important with Big Data sets that can quickly grow to several gigabytes in size. You need to use that URL to connect to the Docker container running Jupyter in a web browser.

.. How can I parallelize a for loop in spark with scala? Does Python have a string 'contains' substring method? filter() only gives you the values as you loop over them. Improving the copy in the close modal and post notices - 2023 edition. Not the answer you're looking for? In this post, we will discuss the logic of reusing the same session mentioned here at MSSparkUtils is the Swiss Army knife inside Synapse Spark. Why can I not self-reflect on my own writing critically? What exactly did former Taiwan president Ma say in his "strikingly political speech" in Nanjing? In this guide, youll see several ways to run PySpark programs on your local machine. Once all of the threads complete, the output displays the hyperparameter value (n_estimators) and the R-squared result for each thread. Note:Small diff I suspect may be due to maybe some side effects of print function, As soon as we call with the function multiple tasks will be submitted in parallel to spark executor from pyspark-driver at the same time and spark executor will execute the tasks in parallel provided we have enough cores, Note this will work only if we have required executor cores to execute the parallel task. say the sagemaker Jupiter notebook? Connect and share knowledge within a single location that is structured and easy to search. This is recognized as the MapReduce framework because the division of labor can usually be characterized by sets of the map, shuffle, and reduce operations found in functional programming. How are we doing?

.. How can I parallelize a for loop in spark with scala? Does Python have a string 'contains' substring method? filter() only gives you the values as you loop over them. Improving the copy in the close modal and post notices - 2023 edition. Not the answer you're looking for? In this post, we will discuss the logic of reusing the same session mentioned here at MSSparkUtils is the Swiss Army knife inside Synapse Spark. Why can I not self-reflect on my own writing critically? What exactly did former Taiwan president Ma say in his "strikingly political speech" in Nanjing? In this guide, youll see several ways to run PySpark programs on your local machine. Once all of the threads complete, the output displays the hyperparameter value (n_estimators) and the R-squared result for each thread. Note:Small diff I suspect may be due to maybe some side effects of print function, As soon as we call with the function multiple tasks will be submitted in parallel to spark executor from pyspark-driver at the same time and spark executor will execute the tasks in parallel provided we have enough cores, Note this will work only if we have required executor cores to execute the parallel task. say the sagemaker Jupiter notebook? Connect and share knowledge within a single location that is structured and easy to search. This is recognized as the MapReduce framework because the division of labor can usually be characterized by sets of the map, shuffle, and reduce operations found in functional programming. How are we doing? Director of Applied Data Science at Zynga @bgweber. By clicking Accept all cookies, you agree Stack Exchange can store cookies on your device and disclose information in accordance with our Cookie Policy. Improving the copy in the close modal and post notices - 2023 edition. When a task is parallelized in Spark, it means that concurrent tasks may be running on the driver node or worker nodes. You don't have to modify your code much: In case the order of your values list is important, you can use p.thread_num +i to calculate distinctive indices. RDDs hide all the complexity of transforming and distributing your data automatically across multiple nodes by a scheduler if youre running on a cluster. How can I self-edit? Do you observe increased relevance of Related Questions with our Machine pyspark parallel processing with multiple receivers. I'm assuming that PySpark is the standard framework one would use for this, and Amazon EMR is the relevant service that would enable me to run this across many nodes in parallel. Leave a comment below and let us know. So, it would probably not make sense to also "parallelize" that loop. @thentangler Sorry, but I can't answer that question. import pyspark from pyspark.sql import SparkSession spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate() Despite its popularity as just a scripting language, Python exposes several programming paradigms like array-oriented programming, object-oriented programming, asynchronous programming, and many others. Spark Streaming processing from multiple rabbitmq queue in parallel, How to use the same spark context in a loop in Pyspark, Spark Hive reporting java.lang.NoSuchMethodError: org.apache.hadoop.hive.metastore.api.Table.setTableName(Ljava/lang/String;)V, Validate the row data in one pyspark Dataframe matched in another Dataframe, How to use Scala UDF accepting Map[String, String] in PySpark. this is parallel execution in the code not actuall parallel execution. To learn more, see our tips on writing great answers. Split a CSV file based on second column value. The above statement prints theentire table on terminal. Making statements based on opinion; back them up with references or personal experience. To subscribe to this RSS feed, copy and paste this URL into your RSS reader. Or will it execute the parallel processing in the multiple worker nodes? PySpark is a good entry-point into Big Data Processing. To learn more, see our tips on writing great answers. I think Andy_101 is right. The PySpark shell automatically creates a variable, sc, to connect you to the Spark engine in single-node mode. To better understand RDDs, consider another example. Not the answer you're looking for? Dealing with unknowledgeable check-in staff. Plagiarism flag and moderator tooling has launched to Stack Overflow! This object allows you to connect to a Spark cluster and create RDDs. Luckily, technologies such as Apache Spark, Hadoop, and others have been developed to solve this exact problem. How do I get the row count of a Pandas DataFrame? Please explain why/how the commas work in this sentence. Create a Pandas Dataframe by appending one row at a time. What is __future__ in Python used for and how/when to use it, and how it works. Menu. Another PySpark-specific way to run your programs is using the shell provided with PySpark itself. Can we see evidence of "crabbing" when viewing contrails? Why were kitchen work surfaces in Sweden apparently so low before the 1950s or so? B-Movie identification: tunnel under the Pacific ocean. This means filter() doesnt require that your computer have enough memory to hold all the items in the iterable at once. There are two reasons that PySpark is based on the functional paradigm: Another way to think of PySpark is a library that allows processing large amounts of data on a single machine or a cluster of machines. newObject.full_item(sc, dataBase, len(l[0]), end_date) As long as youre using Spark data frames and libraries that operate on these data structures, you can scale to massive data sets that distribute across a cluster. How are we doing? This is likely how youll execute your real Big Data processing jobs. You can set up those details similarly to the following: You can start creating RDDs once you have a SparkContext. That being said, we live in the age of Docker, which makes experimenting with PySpark much easier. How to iterate over rows in a DataFrame in Pandas. How to solve this seemingly simple system of algebraic equations? Spark timeout java.lang.RuntimeException: java.util.concurrent.TimeoutException: Timeout waiting for task while writing to HDFS. ). Sleeping on the Sweden-Finland ferry; how rowdy does it get? Find centralized, trusted content and collaborate around the technologies you use most. You can create RDDs in a number of ways, but one common way is the PySpark parallelize() function. Please explain why/how the commas work in this sentence. Preserve paquet file names in PySpark. If you want to do something to each row in a DataFrame object, use map. Using map () to loop through DataFrame Using foreach () to loop through DataFrame import socket from multiprocessing.pool import ThreadPool pool = ThreadPool(10) def getsock(i): s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM) s.connect(("8.8.8.8", 80)) return s.getsockname()[0] list(pool.map(getsock,range(10))) This always gives the same IP address. Instead, use interfaces such as spark.read to directly load data sources into Spark data frames. Your delegate returns the partition-local variable, which is then passed to the next iteration of the loop that executes in that particular partition. I have seven steps to conclude a dualist reality. Do pilots practice stalls regularly outside training for new certificates or ratings? Sets are very similar to lists except they do not have any ordering and cannot contain duplicate values. The code below shows how to perform parallelized (and distributed) hyperparameter tuning when using scikit-learn. Will penetrating fluid contaminate engine oil? The iterrows () function for iterating through each row of the Dataframe, is the function of pandas library, so first, we have to convert the PySpark Dataframe into Pandas Dataframe using toPandas () function. The team members who worked on this tutorial are: Master Real-World Python Skills With Unlimited Access to RealPython. Did some reading and looks like forming a new dataframe with, "it beats all purpose of using Spark" is pretty strong and subjective language. Azure Databricks: Python parallel for loop. Youll learn all the details of this program soon, but take a good look. I actually tried this out, and it does run the jobs in parallel in worker nodes surprisingly, not just the driver! Not the answer you're looking for? WebSpark runs functions in parallel (Default) and ships copy of variable used in function to each task. You can read Sparks cluster mode overview for more details. Can you process a one file on a single node? Take a look at Docker in Action Fitter, Happier, More Productive if you dont have Docker setup yet. Which of these steps are considered controversial/wrong? Can you travel around the world by ferries with a car? Obviously, doing the for loop on spark is slow, and save() for each small result also slows down the process (I have tried define a var result outside the for loop and union all the output to make the IO operation together, but I got a stackoverflow exception), so how can I parallelize the for loop and optimize the IO operation? Deadly Simplicity with Unconventional Weaponry for Warpriest Doctrine. Sleeping on the Sweden-Finland ferry; how rowdy does it get? PySpark also provides foreach () & foreachPartitions () actions to loop/iterate through each Row in a DataFrame but these two returns nothing, In this article, I will explain how to use these methods to get DataFrame column values and process. Not the answer you're looking for? Spark is implemented in Scala, a language that runs on the JVM, so how can you access all that functionality via Python? Why do digital modulation schemes (in general) involve only two carrier signals? Note: Be careful when using these methods because they pull the entire dataset into memory, which will not work if the dataset is too big to fit into the RAM of a single machine. Unsubscribe any time. However, as with the filter() example, map() returns an iterable, which again makes it possible to process large sets of data that are too big to fit entirely in memory. Find centralized, trusted content and collaborate around the technologies you use most. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. At its core, Spark is a generic engine for processing large amounts of data. WebWhen foreach () applied on Spark DataFrame, it executes a function specified in for each element of DataFrame/Dataset. Soon, youll see these concepts extend to the PySpark API to process large amounts of data. Why would I want to hit myself with a Face Flask?

Efficiently running a "for" loop in Apache spark so that execution is parallel. This can leverage the available cores on a databricks cluster. Although, again, this custom object can be converted to (and restored from) a dictionary of lists of numbers. It doesn't send stuff to the worker nodes. In this situation, its possible to use thread pools or Pandas UDFs to parallelize your Python code in a Spark environment. pyspark.rdd.RDD.mapPartition method is lazily evaluated. rev2023.4.5.43379. As per my understand of your problem, I have written sample code in scala which give your desire output without using any loop. Note that sample2 will be a RDD, not a dataframe. take() is a way to see the contents of your RDD, but only a small subset. Phone the courtney room dress code; Email moloch owl dollar bill; Menu However, there are some scenarios where libraries may not be available for working with Spark data frames, and other approaches are needed to achieve parallelization with Spark. Do (some or all) phosphates thermally decompose? The result is the same, but whats happening behind the scenes is drastically different. Spark code should be design without for and while loop if you have large data set. As in any good programming tutorial, youll want to get started with a Hello World example. Should I (still) use UTC for all my servers? 1.00/5 (1 vote) See more: Python machine-learning spark , + I have a housing dataset in which I have both categorical and numerical variables. The snippet below shows how to instantiate and train a linear regression model and calculate the correlation coefficient for the estimated house prices. rev2023.4.5.43379. In case it is just a kind of a server, then yes. Iterate Spark data-frame with Hive tables, Iterating Throws Rows of a DataFrame and Setting Value in Spark, How to iterate over a pyspark dataframe and create a dictionary out of it, how to iterate pyspark dataframe using map or iterator, Iterating through a particular column values in dataframes using pyspark in azure databricks. The first part of this script takes the Boston data set and performs a cross join that create multiple copies of the input data set, and also appends a tree value (n_estimators) to each group. Thanks a lot Nikk for the elegant solution! The power of those systems can be tapped into directly from Python using PySpark! intermediate. But on the other hand if we specified a threadpool of 3 we will have the same performance because we will have only 100 executors so at the same time only 2 tasks can run even though three tasks have been submitted from the driver to executor only 2 process will run and the third task will be picked by executor only upon completion of the two tasks. We now have a task that wed like to parallelize. concurrent.futures Launching parallel tasks New in version 3.2.